Conceptualizing the future with science fiction

Hello!

This is letter #3 of Wordplay (here are the first two). I hope that everyone had a happy Thanksgiving! We stayed at home this year, rather than drive down to Pennsylvania. Instead, my mother-in-law drove up for the holiday, and left this morning. I like the drive down to PA, but it was nice to stay here — I feel like I’ve been on the road a lot the last couple of months. The last couple of weeks have been filled with the usual day-job stuff, but I'm working on getting back to writing some fiction, escaping to the local library with some headphones to tap away. Very stereotypical, I know.

This issue is something that's been on my mind for a short while, and it's a bit on the longer side (sorry).

Conceptualizing the future

A couple of weeks ago, I reviewed Alec Nevala-Lee’s — dare I say it — astounding book on the history of Astounding Science Fiction and four of the men associated with it: John W. Campbell Jr., Isaac Asimov, Robert A. Heinlein and L. Ron Hubbard. It’s a good book, and if you have any interest in the history of the genre, or if you just like those authors, you should check it out.

In it, Nevala-Lee recounts a story that's been told many times over in the annals of genre lore: the creation of Asimov’s celebrated Three Laws of Robotics. In 1940, Asimov published a short story called “Robbie” (originally titled “Strange Playfellow”) in the pulp magazine Super Science Stories. It follows a young girl named Gloria and her family robot, Robbie. Her mother, concerned that Gloria is spending more time with the robot than with her friends, sends it back to the factory and replaces it with a dog. Gloria is despondent, and in an attempt to cheer her up, her parents take her to New York City, where they tour a U.S. Robots factory. There, she catches sight of her missing friend and runs toward it, straight into the path of a moving tractor. Robbie saves her, whereupon the family realizes that they can’t give it up and brings it home.

Asimov wanted to continue writing about robots, and pitched another story to Campbell at Astounding. As the story goes, Campbell told him, “Look, Asimov, in working this out, you have to realize that there are three rules that robots have to follow,” and proceeded to list them off. These became the vaunted “Three Laws of Robotics.” For the uninitiated, they are:

- First Law - A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- Second Law - A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- Third Law - A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

(There's also a funky "Zeroth Law" that Asimov retconned in later to allow some more dramatic stories: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.)

The laws are a logical loop designed to protect humans, and they're the backbone of Asimov’s collection, I, Robot. This was the book I was hooked on in high school: I've read it dozens of times over the years, and I still have three copies kicking around the house. (As I write this, I realize that the mug I picked up this morning for tea is … an I, Robot one.) But the book wasn't just a hit with science fiction fans; it would become influential for roboticists as well. Prior to this, robots in fiction were largely depicted as antagonists: menacing machines that were overcome by the brawn and quick thinking of human heroes. I, Robot demonstrated that robots could be more than props in a story, and that the ins and outs of how they worked could be the story.

The reason for this is the philosophical angle that I, Robot takes. Each story is a logic puzzle that imagines one of the ways in which the Three Laws of Robotics don’t work as planned. In each one, Asimov is essentially finding bugs in the system, which would undoubtedly be forwarded to someone at U.S. Robots and Mechanical Men, who would issue a software update to correct the issue.

Remember, Asimov was writing these stories in the late 1930s and 1940s, when a “computer” was a person who made the calculations that an electronic computer does now, way, way before the advent of the technological age we’re now in. This is where Asimov’s collection is particularly important: he was thinking about how robotics and advanced technology would be used, and what some of the pitfalls would be. The behaviors that violate the Three Laws are essentially edge cases, extreme exceptions to rules that otherwise cover a broad swath of situations. Robotics and artificial intelligence would have other notable representations in mass media: look no further than HAL 9000 in 2001: A Space Odyssey, R2-D2 and C-3PO in Star Wars, or the T-800 in The Terminator (which, incidentally, you can watch on YouTube for free). Between them, they show both the downside of machine intelligence's cold logic and its useful role in society.

I bring this up because it’s something that I’ve been thinking about in light of a couple of notable recent examples of technology run amok. The first is the latest scandal out of Silicon Valley. Take your pick of company: Amazon (leaking customers' names and email addresses), Facebook (where to start?), Twitter (not removing harassing tweets from the Florida pipe-bomb suspect), and so forth. The other is the dueling headlines about the Camp Fire, which devastated entire towns in California, and a newly released report from the US government that says the effects of climate change are going to make life worse for everyone if significant and immediate steps aren’t taken… now.

Science fiction isn’t designed to be a predictive tool. It's entertainment. As P.W. Singer notes in his book Wired for War: The Robotics Revolution and Conflict in the 21st Century, Asimov's laws don't actually work in the real world; they're a plot device. We also already have robots that actively break the First Law.

But science fiction places a heavy emphasis on realism, and people are drawn to it because of what it's about. The stories often miss the mark when it comes to predictions, but that's beside the point. Good science fiction, as Frederik Pohl once put it, "should be able to predict not the automobile but the traffic jam." Asimov's stories are interesting not because they accurately predicted robots (we're still a long way from Asimov's vision of humanoid robotics), but because they anticipated the problems that come with technology. They’ve helped shape opinions of robotics and AI for decades. HAL and the Terminator serve as useful visuals for what we don’t want, while Asimov's positronic-brain-powered robots imagine how we might get around those problems. If either of those AIs had been equipped with Asimov’s Three Laws, we wouldn’t be left with dead astronauts or a post-apocalyptic world.

I recently moderated a panel on AI at a conference held by West Point’s Modern War Institute, and one topic that came up was how much autonomy we’re comfortable giving robots. Machine intelligence can recognize a variety of objects, and can do so far more efficiently, and with far more data, than people can. But there’s sizable (and justified) opposition to the idea that we’d allow robots to locate and take out targets themselves, without a person in the loop. The Terminator and Asimov's stories certainly play a role in shaping some of the thinking behind that opposition.

But while there are people who have thought long and hard about the ramifications of programming robots (informed in part by science fiction), there doesn’t seem to be anything with the same impact on the other big problems we face right now, namely climate change and the effect that major tech companies have on the populations that they "serve." Science fiction is an ideal medium for framing this conversation.

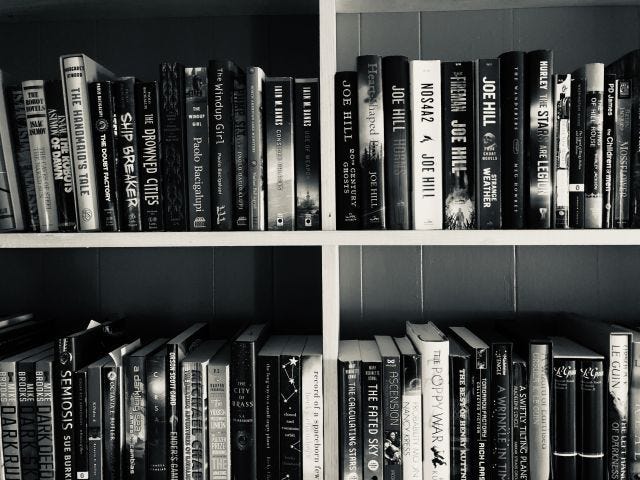

This isn’t to say that science fiction authors haven’t railed against capitalism or climate change and their respective effects on the world; many have, over the decades. Paolo Bacigalupi’s short fiction and his novels The Windup Girl and The Water Knife put climate change front and center in the genre, while books like Iain M. Banks’ Culture series and Becky Chambers’ recent Record of a Spaceborn Few explore some neat ideas about the excesses of capitalism. Some, like Kim Stanley Robinson’s New York 2140 and Ursula K. Le Guin’s The Word for World Is Forest, link the two: climate change is a result of a capitalist society, and to fix the planet, you need to fix the global economy.

But while those books exist and are lauded as classics, I don't see anything with quite the footprint of Asimov's collection. Part of that is just the structure of the industry: Asimov is a huge name, and there aren't many people who have the same stature, even today. But I think there's both room and a need for such practical stories, and that the genre can do well by addressing topical issues, not by hiding behind the curtain of "it's just entertainment!" but by embracing the genre's history of solving problems. I don’t think stories hold all of the answers, and I don’t think that a quick fix to the problems we’ll face is hidden somewhere in the pages of a book. Asimov didn't set out to write a collection of stories to warn future readers of potential dangers. But what he and Campbell did do was think through the problems that the technology in the stories would pose, and how people would react to them. I would recommend Eliot Peper's Cumulus, Linda Nagata's The Last Good Man, and Malka Older's Infomocracy, three recent books that are thinking about these things.

Science fiction prizes realism, and to remain relevant, genre authors need to keep topics like the effects of technological platforms, economic inequality, racial disparity, and climate change in the backs of their minds as they write. SF can shape attitudes by thinking through the problems created by new technologies, and it can be a good guide not to what will happen, but to what we can do next.

Certainly, companies like Facebook and Twitter could use fiction to think through some of the pitfalls of the technology they deploy, while the general public (especially legislators and community leaders) should pick up books like New York 2140, The Last Good Man and The Windup Girl, so that what those stories can teach us sits in the backs of their minds when they make decisions that will impact our future. As I noted back in August, science fiction is an ideal framework for readers and future leaders to engage with the future and to start asking the questions that we’ll need to address. The future can go in any number of ways, and books and stories like I, Robot could be valuable tools to help figure out which direction we need to take.

My reading list

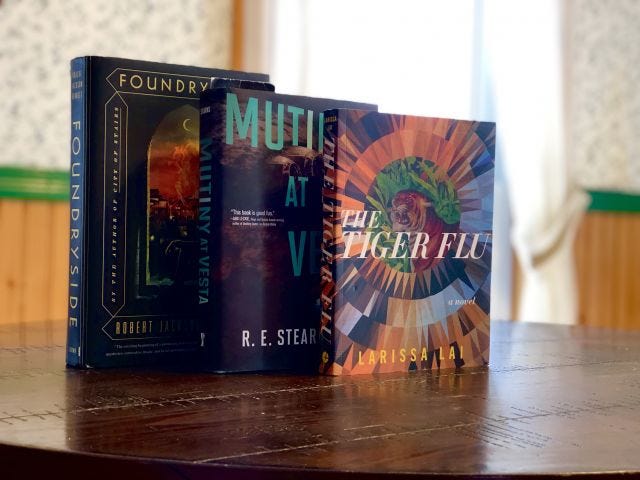

That was long, so I’ll keep this short. I’ve since finished James Lovegrove’s Big Damn Hero (my review is here), and I’m plugging away at Mutiny at Vesta by R.E. Stearns, Foundryside by Robert Jackson Bennett, The Fighters: Americans in Combat in Afghanistan and Iraq by C.J. Chivers, and The Tiger Flu by Larissa Lai. On deck: Unholy Land by Lavie Tidhar, The Consuming Fire by John Scalzi, and Thin Air by Richard K. Morgan. I’ve also got a backlogged pile (Friday Black, Mage Against the Machine, Before She Sleeps, Severance, all just the tip of the iceberg) that I’ve been meaning to get to this year.

I’ve also slacked off when it comes to reading short fiction, but I did pick up the August 2018 issue of Clarkesworld Magazine and read ‘The Veilonaut’s Dream’ by Henry Szabranski, a good story. It’s about a group of astronauts who travel through a portal that randomly opens up to another part of the universe; occasionally, they get stuck on the other side. The main character, Mads, is obsessed with finding the planet that she glimpsed on her first trip, even though they’ve never found a pattern to where the portal opens. I’ve got a growing stack of magazines to catch up on, and hopefully I’ll have some new recommendations next issue.

I will be putting together a best-of-the-year list for The Verge later this month. If you read something published in 2018 that you really liked and think I should consider including, I’d be interested in hearing about it.

Upcoming Stuff

That thing I said would be debuting last issue? Yeah, that’s been delayed. Not sure when exactly it’ll be released, but soon! I hope! Other projects in the works… still in progress. Stay tuned.

That’s it for now; this has been long enough. Thanks for reading. I really do appreciate it, and I’m happy that people have enjoyed it thus far. As always, let me know what you think, and if you like what you’ve read, please pass it along or post the link online.

Andrew